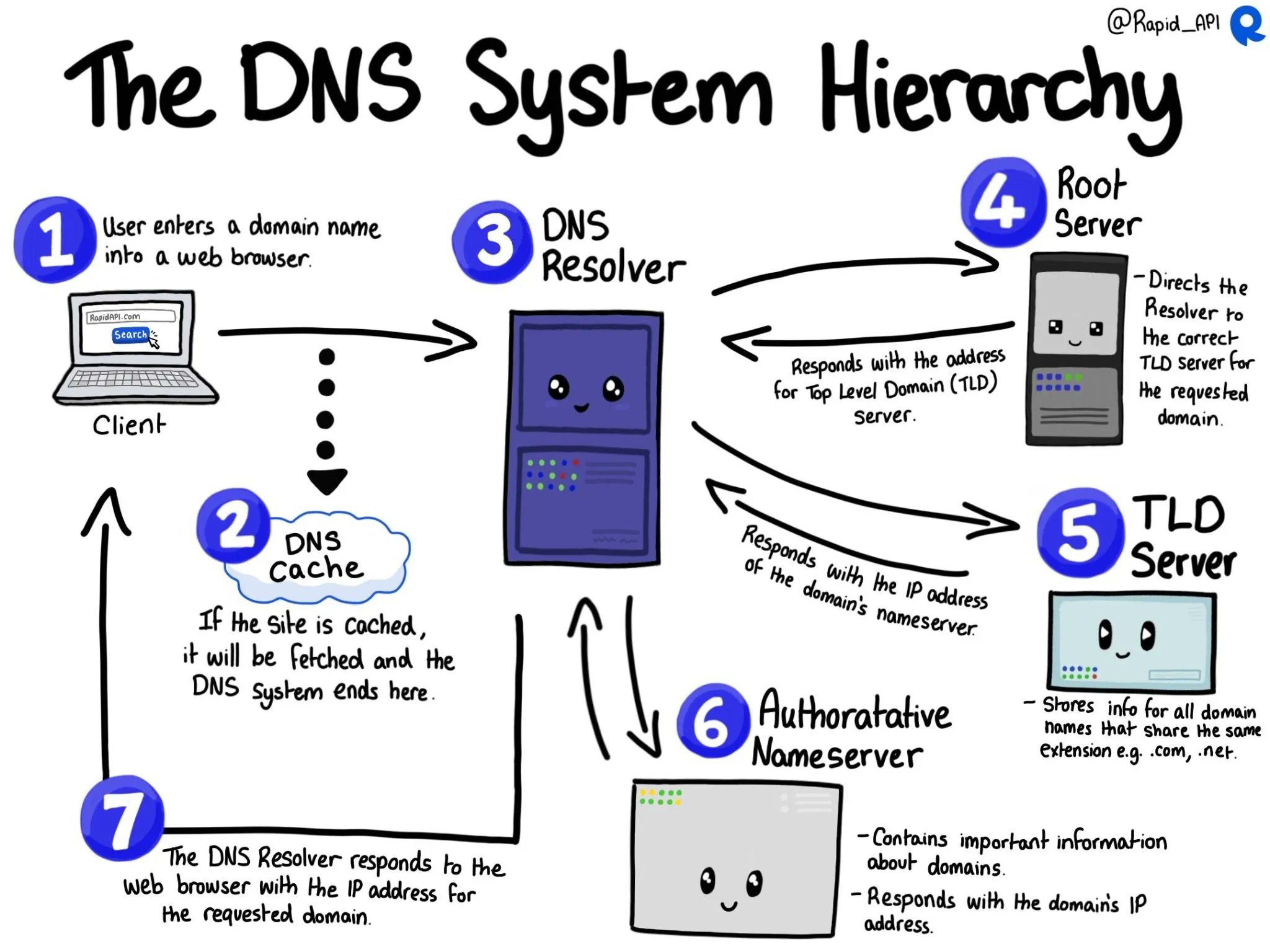

A Brief History

The story of modern language models is like watching a family tree grow, with each new generation learning from the triumphs and mistakes of those who came before. Let’s trace this fascinating journey from 2017 to today.

2017: The Transformer Revolution

The story begins with a research paper titled “Attention Is All […]

Remember how we compared a language model to an advanced autocomplete system? Well, here’s where the story gets interesting. Not all language models learn the same way. Imagine two students sitting in the same classroom, both brilliant, but each taught to master language through completely different methods. One becomes an incredible writer, the other becomes […]

Think of a language model as a really sophisticated autocomplete system. You know how your phone suggests the next word when you’re texting? A language model works on that same principle, but at a much more advanced level. At its core, a language model is a program that has read millions of books, articles, and […]

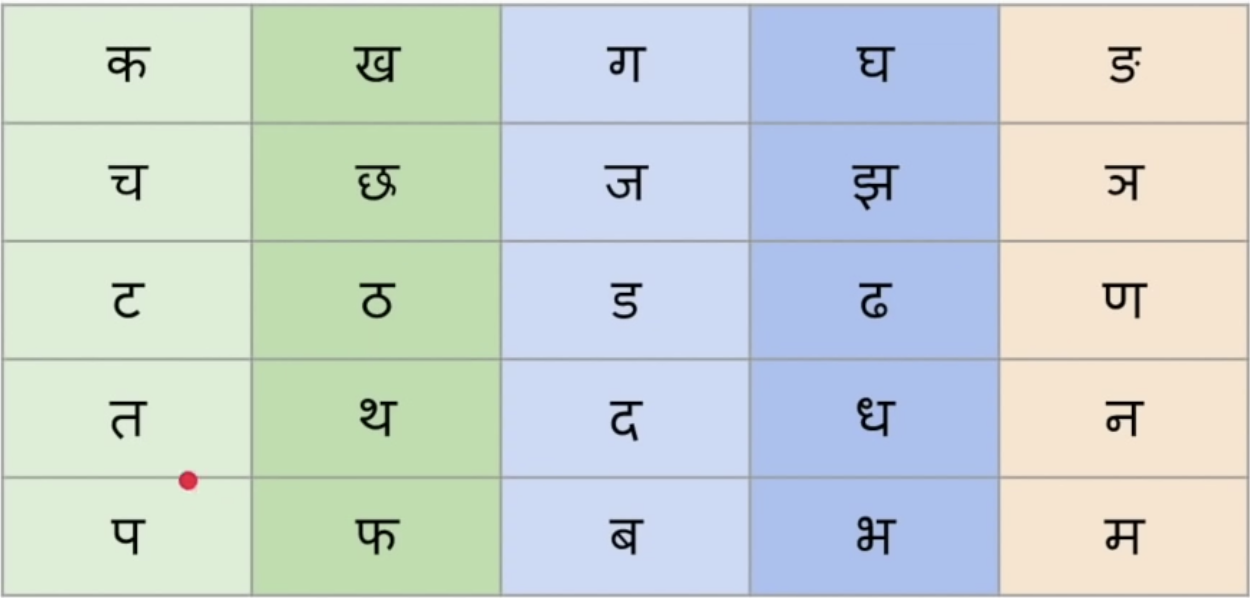

Understanding how computers “read” and understand text is a fascinating field. One of the most fundamental techniques for identifying important keywords in a document, relative to a collection of documents, is TF-IDF (Term Frequency-Inverse Document Frequency). I recently embarked on a gamified learning challenge to demystify TF-IDF, breaking it down into its core components. This […]
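To make the idea concrete, here is a minimal sketch of TF-IDF scoring in plain Python. It assumes one common variant: term frequency is the count divided by document length, and inverse document frequency is `log(N / df)`. The function name `tf_idf` and the sample documents are made up for illustration, and the full post may define the components differently.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: score} dict per document (illustrative variant)."""
    # Naive tokenization: lowercase and split on whitespace.
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    df = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        scores.append({
            # TF (share of the document) times IDF (rarity across documents).
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores


# Hypothetical sample collection.
docs = ["the cat sat", "the dog sat", "the bird flew"]
scores = tf_idf(docs)
top_term = max(scores[0], key=scores[0].get)
```

In this sample, "the" appears in every document, so its IDF is `log(3/3) = 0` and it scores zero everywhere, while "cat" appears only in the first document and ends up as that document's highest-scoring term. Libraries often apply smoothing so that such terms do not vanish entirely; that is omitted here to keep the arithmetic easy to follow.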
